Collect Once. Activate Everywhere.

Replace fragmented SDKs with a single, real-time data layer for your Analytics, Ads and AI.

A Unified Platform for Real-Time Data Collection and Activation

Reduce complexity, eliminate technical debt, and ensure every team—from Marketing to Ad Ops to Engineering—works from the same trusted data.

Seamlessly track the entire user journey in and out of media players, manage data governance on the fly, and activate real-time experiences exactly where they’re needed.

One Source. Total Control. Zero Friction.

This isn't another analytics tool to manage—it’s the foundational data layer that makes all your existing tools better.

Effortlessly Capture and Standardize Every Interaction

Datazoom simplifies capturing and organizing user behavior data and provides an adaptable, actionable foundation.

SDKs for Every Platform, Media Player and Ad Framework

Hundreds of Pre-Instrumented Events and Metadata with Unlimited Custom Scripting

Built-in Hierarchical Sessionization for App, Content and Ad Sessions

Remote Governance and Data Optimization

Turn On or Off Collection Granularly

Use-Case Specific Sampling

Conditional Filtering Rules

Eliminate engineering delays with code-free updates. Empower teams while reducing infrastructure costs through precise, granular data controls.

Deliver and Activate Data Anywhere

Reformat and Transform Data by Destination

Secure Partner Syndication

Send to Any Destination Instantly

Sub-Second Latency

Drive immediate action across every tool. Securely syndicate data to partners and activate real-time experiences with sub-second delivery.

Introducing the Verified Session Token

Drive More Ad Revenue Than Ever Before

The Data Dictionaries

A Single Low-Effort Integration Unlocks Hundreds of Standardized Data Points

- Extensive device, location and network metadata for interaction events, grouped by app, content and ad session IDs.

- Page and asset information. Content interaction events (milestones, thresholds, heartbeats).

- Ad break and ad milestone, skip, error and click events with extended ad metadata, with support for Google IMA, Google IMA DAI, FreeWheel, MediaTailor and Yospace.

- All video playback stages from start to finish, including buffering, errors and quality changes, with device, player, asset and network information.

- You can instrument any event or metadata to be included in the event stream (e.g. internal or third-party user or content IDs and categories, user journey events, extended metadata).

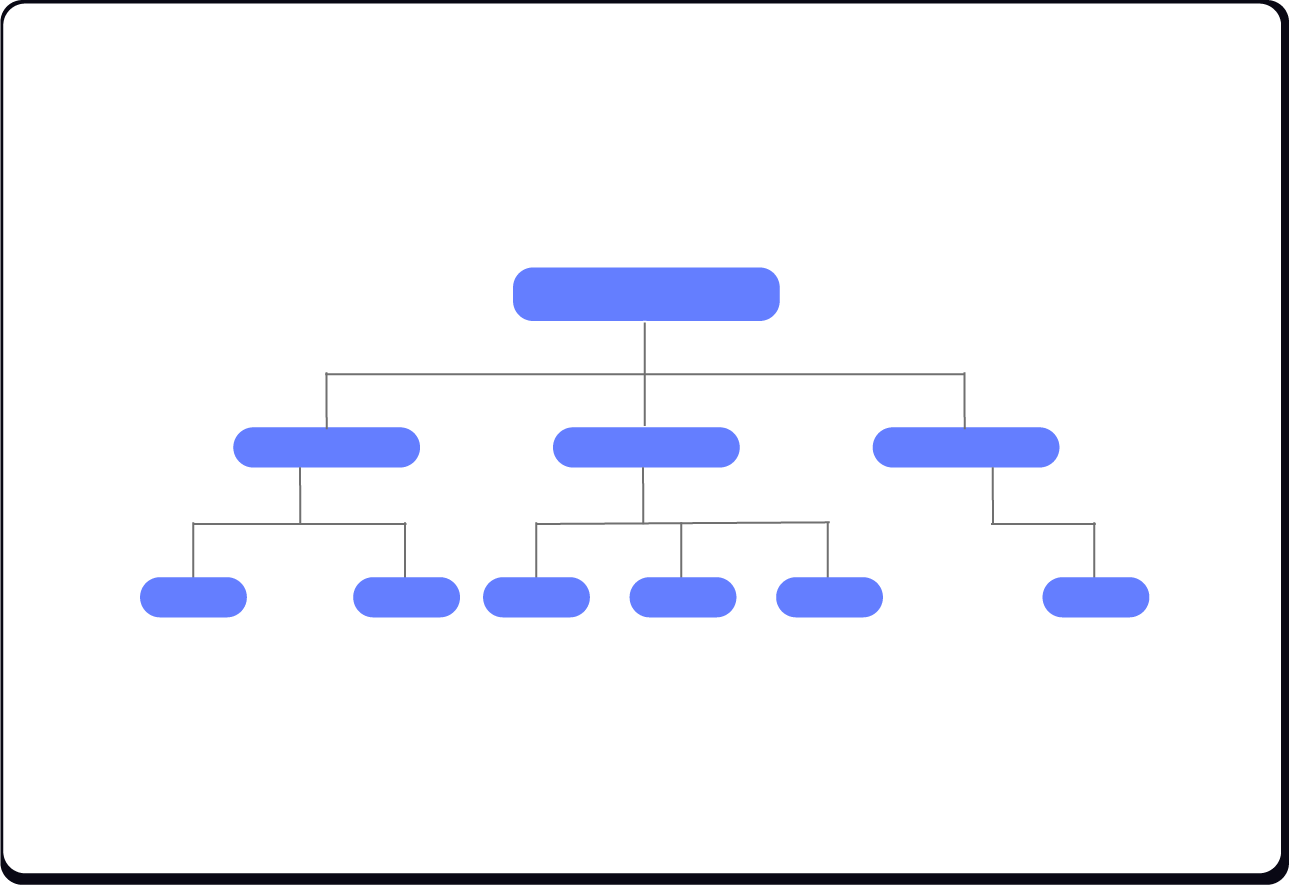

A Unified Source of Truth

A unified data source from which each team chooses what data it needs, how much of it, in what format and where to send it, whenever it wants.

Highly Available, Performant, Scalable.

The Datazoom SDKs are dead simple to integrate, non-blocking, ultra-lightweight, and never compromise player performance or build stability.

The Datazoom Platform is fully built on AWS by industry veterans to provide financial-grade data services at virtually any scale.

Trusted Collaborators

Amazon Web Services

Coralogix

InfoTrust

TV Insight

Hydrolix for AWS

TrackIt

TrafficPeak by Akamai

CHECK OUT OUR LATEST RELEASES

- React Native Collector
- Remotely Instrument Custom Events & Metadata
- Single Sign-On (SSO)
- Fully Tractable Custom Data
- Partner Syndication



Book a Demo

Contact Us For a Demo of Our Real-Time Data Platform

Publishers (video, audio and text)

Ecommerce

Apps and games

Partners (ad, content, data solutions)
