Taming Large, Complex Data Sets with Transformations

Modify, enhance, or reformat collected data before it’s sent to downstream systems or connectors for analysis and reporting. Apply rules and calculations to transform raw data collected by Datazoom’s collectors into formats needed on egress.

Working with data after it arrives presents many challenges, from long delays before it can be used to calculation errors and other unintended changes.

Data Transformation Challenges

Navigating Complexity and Scale

As data volumes grow, data transformations become significantly more complex. The challenge lies in orchestrating and executing these transformations across massive data sets, which often demands substantial computational resources.

Ensuring Integration Compatibility

Transformed data must match the formats and schemas that downstream tools, databases, and systems expect.

Mitigating Costly Errors

Errors in data transformation processes can cause lost data, inaccurate results, and delays in analysis.

Adapting Over Time

Data transformation requirements can evolve over time due to shifting business needs or regulatory changes. Companies must be adaptable without disrupting operations.

Building Expertise and Governance Practices

Developing the expertise needed to create, optimize, and manage complex transformation processes and data governance practices takes time and effort.

The Datazoom DaaS Platform's built-in data transformation features can provide significant business value.

Datazoom’s Key Capabilities for Data Transformations

Streamline Complex Transformations

Apply custom rules without the need for extensive coding, technical expertise, or large-scale data processing.

Get Compatible Data in Real Time

Ensure compatibility with downstream systems without waiting for post-processing.

Adaptability and Future-Proofing

Easily adjust transformation rules on demand.

03

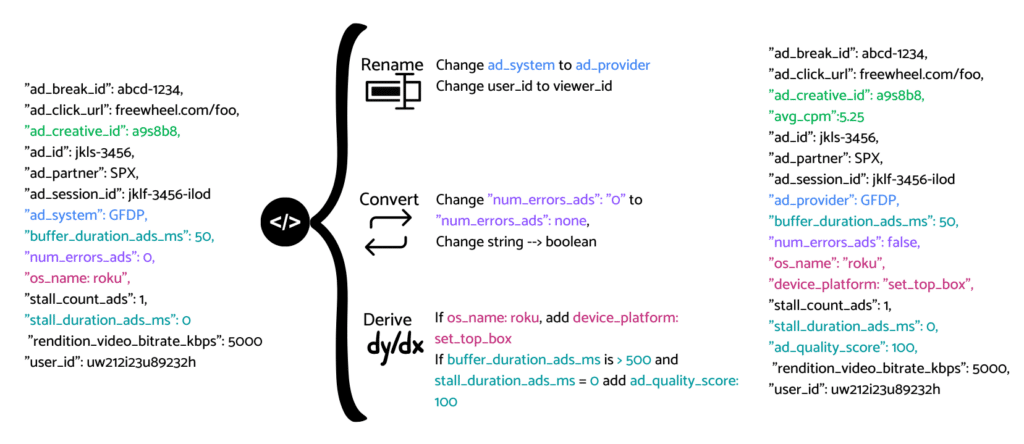

Data transformations can be carried out in a variety of ways through the Datazoom Platform: standardizing naming conventions, converting data types, and even changing values based on other data points.
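
To illustrate a change driven by another data point, here is a minimal Python sketch. It is not Datazoom's rule syntax; the field names `ad_id` and `playback_context` are hypothetical, chosen only to show one field's value being set from another's.

```python
from typing import Any, Dict, Mapping


def tag_playback_context(event: Mapping[str, Any]) -> Dict[str, Any]:
    """Return a copy of the event with a 'playback_context' field whose
    value depends on another data point ('ad_id').
    Both field names are hypothetical, for illustration only."""
    out = dict(event)  # copy so the collected event is never mutated in place
    # A non-empty ad_id means the event happened during ad playback.
    out["playback_context"] = "ad" if event.get("ad_id") else "content"
    return out


if __name__ == "__main__":
    print(tag_playback_context({"ad_id": "abc123", "position_ms": 5000}))
    print(tag_playback_context({"position_ms": 90000}))
```

Returning a new dictionary rather than editing the input keeps each rule independent, so several rules can be chained in any order without side effects.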

Example Transformation Use Cases

Standardizing Naming Conventions

A connector’s data schema may require egress data to follow its naming conventions. Data transformations can reconcile these differing conventions. For example, rename “content_title” to “video_title” or “ad_system” to “ad_provider.”
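
Conceptually, a renaming rule is a lookup table from source field names to target field names. The Python sketch below uses the two renames from the example above. `FIELD_RENAMES` and `rename_fields` are hypothetical names for illustration and do not reflect how the Datazoom Platform stores its rules.

```python
from typing import Any, Dict, Mapping

# Hypothetical mapping from collector field names to a connector's schema.
FIELD_RENAMES: Mapping[str, str] = {
    "content_title": "video_title",
    "ad_system": "ad_provider",
}


def rename_fields(
    event: Mapping[str, Any],
    renames: Mapping[str, str] = FIELD_RENAMES,
) -> Dict[str, Any]:
    """Return a new event whose keys follow the target naming convention.
    Keys without a mapping pass through unchanged."""
    return {renames.get(key, key): value for key, value in event.items()}


if __name__ == "__main__":
    print(rename_fields({"content_title": "Pilot", "ad_system": "vast", "session_id": "s1"}))
```

If an event already contains both a source name and its target name, the value that appears later in the event wins; a production rule would need an explicit policy for that collision.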

Calculating Metrics On The Fly

Derive metrics like “ad_quality_score” from “buffer_duration_ads_ms” and “stall_duration_ads_ms” data points using transformation logic.
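
The page does not define how “ad_quality_score” is calculated, so the Python sketch below uses an assumed formula purely for illustration: start at 100 and subtract 10 points per second of combined ad buffering and stalling, floored at 0. The constants and the formula are hypothetical; only the two input field names come from the text above.

```python
from typing import Any, Mapping

# Hypothetical scoring constants; the real ad_quality_score definition is not given.
MAX_SCORE = 100.0
PENALTY_PER_SECOND = 10.0


def ad_quality_score(event: Mapping[str, Any]) -> float:
    """Derive an assumed 0-100 score from ad buffering and stall durations
    (both in milliseconds). Missing or empty values count as 0 ms."""
    buffer_ms = float(event.get("buffer_duration_ads_ms") or 0)
    stall_ms = float(event.get("stall_duration_ads_ms") or 0)
    interruption_s = (buffer_ms + stall_ms) / 1000.0
    # Clamp at zero so long interruptions never produce a negative score.
    return max(0.0, MAX_SCORE - PENALTY_PER_SECOND * interruption_s)


if __name__ == "__main__":
    print(ad_quality_score({"buffer_duration_ads_ms": 1500, "stall_duration_ads_ms": 500}))  # 80.0
```

Computing the metric as events flow through, rather than in a later batch job, means the derived field is already present when the data reaches the connector.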

Data Type Conversion

If a video publisher collects “num_errors_ads” as a string but needs it as an integer or float for numerical analysis, a data transformation can perform the conversion.
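
A string-to-number conversion can be sketched in Python as shown below. The helper names `to_number` and `convert_num_errors_ads` are hypothetical. Returning `None` for unparseable values is an assumed design choice, not documented Datazoom behavior.

```python
from typing import Any, Dict, Mapping, Optional, Union

Number = Union[int, float]


def to_number(raw: Any) -> Optional[Number]:
    """Convert a value such as '3' or '2.5' to int or float.
    Returns None when the value cannot be parsed, so one bad record
    does not halt the pipeline."""
    if raw is None or isinstance(raw, bool):  # bool is a subclass of int; reject it
        return None
    if isinstance(raw, (int, float)):
        return raw  # already numeric
    text = str(raw).strip()
    try:
        return int(text)  # prefer an integer for whole-number strings like "4"
    except ValueError:
        pass
    try:
        return float(text)  # fall back to float for values like "2.5"
    except ValueError:
        return None


def convert_num_errors_ads(event: Mapping[str, Any]) -> Dict[str, Any]:
    """Return a copy of the event with 'num_errors_ads' converted to a number."""
    out = dict(event)
    if "num_errors_ads" in out:
        out["num_errors_ads"] = to_number(out["num_errors_ads"])
    return out


if __name__ == "__main__":
    print(convert_num_errors_ads({"num_errors_ads": "4"}))
```

Depending on the downstream system, an unparseable value could instead be dropped, routed to an error stream, or made to raise an exception. Mapping it to `None` is only one option.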